Recently, text-based sentiment prediction has been studied extensively, while image-centric sentiment analysis has received much less attention. In this paper, we study the problem of understanding human sentiments from large-scale social media images, considering both visual content and contextual information, such as comments on the images and captions. The challenge of this problem lies in the "semantic gap" between low-level visual features and higher-level image sentiments. Moreover, the lack of proper annotations/labels for the majority of social media images presents another challenge. To address these two challenges, we propose a novel Unsupervised SEntiment Analysis (USEA) framework for social media images. Our approach exploits the relations between visual content and relevant contextual information to bridge the "semantic gap" in the prediction of image sentiments. Through experiments on two large-scale datasets, we show that the proposed method is effective in addressing both challenges.

Example Flickr images grouped by sentiment: negative, neutral, positive.

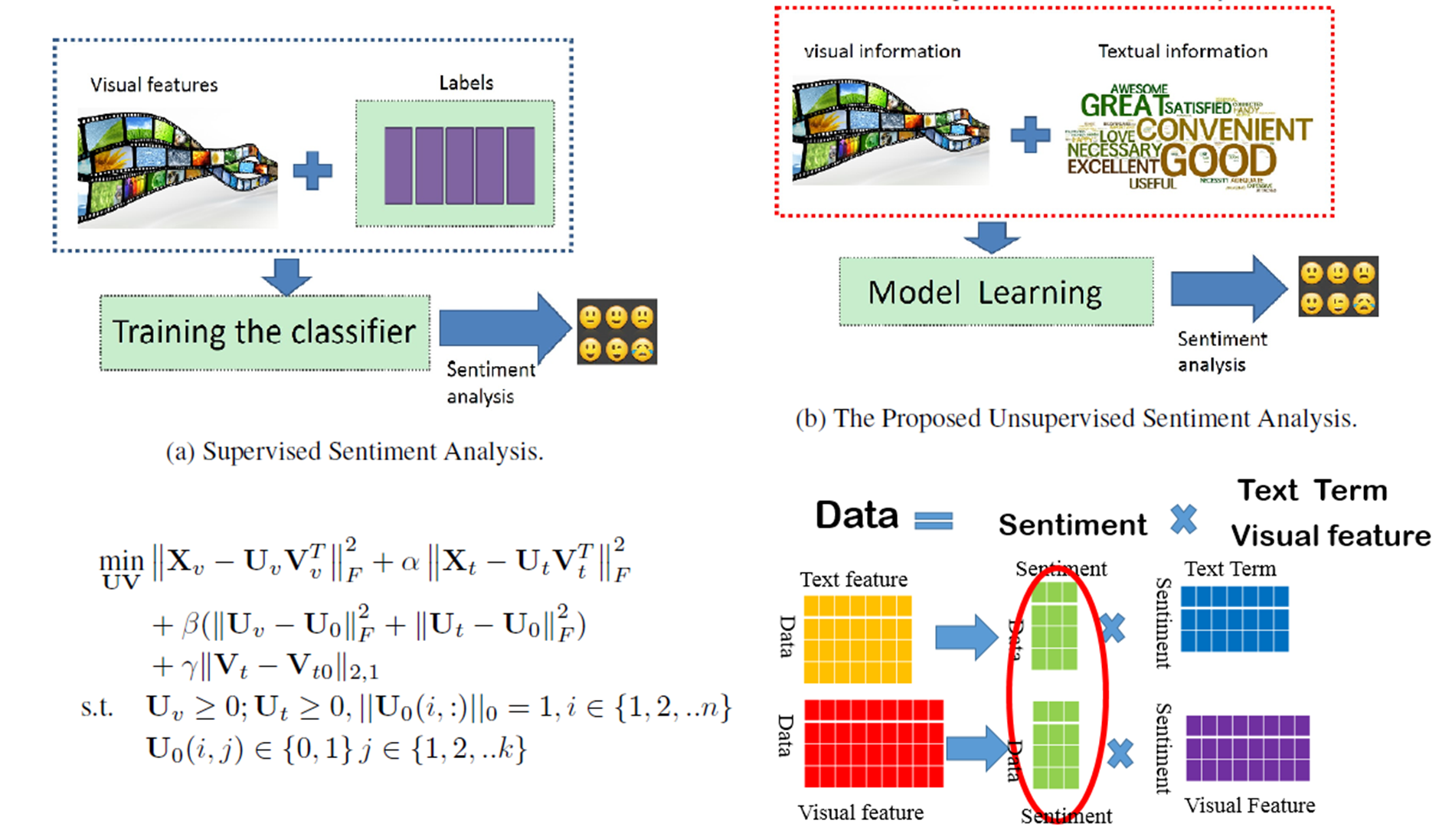

Background: Current methods of sentiment analysis for social media images include approaches based on low-level visual features, approaches based on mid-level visual features, and deep learning based approaches. The vast majority of existing methods are supervised, relying on labeled images to train sentiment classifiers. Unfortunately, sentiment labels are generally unavailable for social media images, and obtaining labeled sets large enough for robust training is too labor- and time-intensive. To exploit the vast amount of unlabeled social media images, an unsupervised approach is much more desirable. This paper studies unsupervised sentiment analysis.

Challenges: Typically, visual features such as color histograms, brightness, the presence of objects, and visual attributes lack the level of semantic meaning required for sentiment prediction. In the supervised case, label information can be used directly to build the connection between the visual features and the sentiment labels; in the unsupervised case, no such labels are available to bridge this gap. Thus, unsupervised sentiment analysis for social media images is inherently more challenging than its supervised counterpart.
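To make the "semantic gap" concrete, the sketch below computes two of the low-level features named above, a joint RGB color histogram and mean brightness, for an image given as a plain list of (R, G, B) pixels. It is a minimal illustration under assumptions (4 bins per channel, ITU-R BT.601 luma weights for brightness, Python lists instead of an image library), not the feature pipeline used in the paper; the function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255


def color_histogram(pixels: List[Pixel], bins: int = 4) -> List[float]:
    """Joint RGB histogram with `bins` levels per channel, normalized to sum to 1.

    Assumption: `bins` divides 256 evenly (e.g. 2, 4, 8, 16).
    """
    hist = [0.0] * (bins ** 3)
    width = 256 // bins  # number of intensity values per bin
    for r, g, b in pixels:
        idx = (r // width) * bins * bins + (g // width) * bins + (b // width)
        hist[idx] += 1
    n = len(pixels)
    return [h / n for h in hist] if n else hist


def brightness(pixels: List[Pixel]) -> float:
    """Mean luma (ITU-R BT.601 weights), in the range 0..255."""
    if not pixels:
        return 0.0
    return sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels) / len(pixels)


if __name__ == "__main__":
    # A hypothetical 4-pixel "image": white, black, red, blue.
    tiny = [(255, 255, 255), (0, 0, 0), (255, 0, 0), (0, 0, 255)]
    print(len(color_histogram(tiny)), round(brightness(tiny), 3))
```

The output is only a vector of bin frequencies and a single number; nothing in it says whether the image looks sad or cheerful. In the supervised setting a classifier learns that mapping from labels, which is exactly what is missing here.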

In this paper, we study unsupervised sentiment analysis for social media images with textual information by investigating two related challenges: (1) how to systematically model the interaction between images and textual information so as to support sentiment prediction using both sources of information, and (2) how to use textual information to enable unsupervised sentiment analysis for social media images. To address these two challenges, we propose a novel Unsupervised SEntiment Analysis (USEA) framework, which performs sentiment analysis for social media images in an unsupervised fashion. Figure 1 schematically illustrates the difference between the proposed unsupervised method and existing supervised methods. Supervised methods use label information to learn a sentiment classifier, while the proposed method does not assume that label information is available and instead employs auxiliary textual information. Our main contributions can be summarized as follows:
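The page does not describe how USEA itself models the image-text interaction, so the following is only a generic baseline under stated assumptions, not the USEA algorithm. A hypothetical toy lexicon scores each image's caption or comments, and those scores are then smoothed over a visual-similarity graph (a simple label-propagation scheme), so an image with no usable text still receives a sentiment score from visually similar images. All names (`LEXICON`, `text_score`, `propagate`, `label`, `alpha`, `eps`) are illustrative.

```python
import math
import re
from typing import Dict, List, Sequence

# Hypothetical toy lexicon (assumption): a real system would use a curated
# sentiment lexicon rather than this handful of words.
LEXICON: Dict[str, float] = {
    "love": 1.0, "beautiful": 1.0, "happy": 1.0, "great": 1.0,
    "sad": -1.0, "awful": -1.0, "hate": -1.0, "ugly": -1.0,
}


def text_score(text: str) -> float:
    """Average lexicon polarity of the words in a caption/comment; 0.0 if none match."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = [LEXICON[w] for w in words if w in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two visual feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def propagate(features: List[List[float]], texts: List[str],
              alpha: float = 0.5, iters: int = 20) -> List[float]:
    """Smooth text-derived scores over a visual-similarity graph.

    Each image's score mixes its own text score (weight 1 - alpha) with the
    similarity-weighted mean of the other images' scores (weight alpha).
    Assumes non-negative features (e.g. histograms) so all weights are >= 0.
    """
    n = len(features)
    prior = [text_score(t) for t in texts]
    weights = [[cosine(features[i], features[j]) if i != j else 0.0
                for j in range(n)] for i in range(n)]
    scores = prior[:]
    for _ in range(iters):
        updated = []
        for i in range(n):
            total = sum(weights[i])
            neighbour = (sum(w * s for w, s in zip(weights[i], scores)) / total
                         if total else 0.0)
            updated.append((1 - alpha) * prior[i] + alpha * neighbour)
        scores = updated
    return scores


def label(score: float, eps: float = 0.1) -> str:
    """Map a score to negative/neutral/positive with a hypothetical margin eps."""
    if score > eps:
        return "positive"
    if score < -eps:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    # Image 1 has no text but looks like image 0, so it inherits a positive score.
    feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
    captions = ["What a beautiful sunset!", "", "so sad and awful"]
    print([label(s) for s in propagate(feats, captions)])
    # prints ['positive', 'positive', 'negative']
```

Keeping the text prior in every iteration (the `1 - alpha` term) stops the scores from washing out toward the graph average; `alpha` trades off trust in an image's own text against its visual neighbours.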

Yilin Wang, Suhang Wang, Jiliang Tang, Huan Liu, Baoxin Li. "Unsupervised Sentiment Analysis for Social Media Images". In IJCAI, 2015. [paper] [bibtex]

    @InProceedings{Wangy-etal15a,
      author = {Yilin Wang and Suhang Wang and Jiliang Tang and Huan Liu and Baoxin Li},
      title = {Unsupervised Sentiment Analysis for Social Media Images},
      booktitle = {International Joint Conference on Artificial Intelligence (IJCAI)},
      month = {June},
      year = {2015}
    }

Downloads: USEA (pronounced "you see"), slides, poster.